Projects

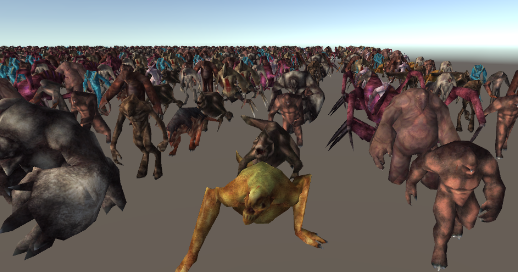

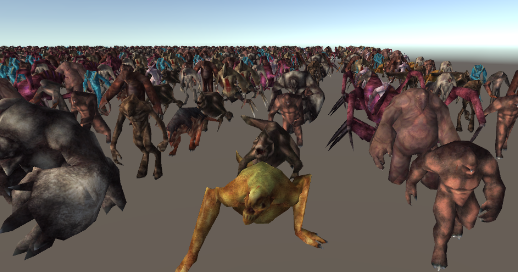

Unity Crowd Renderer

Real-Time Large Crowd Rendering in Unity using Parallel LOD Technique.

Independent Study during Master's programme.

Supervisor: Dr. Chao Peng

This project is based on the paper Real-Time Large Crowd Rendering with Efficient Character and Instance Management on GPU by Peng and Dong. The original paper rendered a large animated crowd efficiently in real time using CUDA and OpenGL. My partner and I worked with the authors to implement the same technique in the Unity game engine, this time using compute shaders.

Briefly, here’s what we did on this project:

- Level-of-detail meshes were regenerated every frame for each instance in the scene, based on its distance to the camera

- The LOD computation and mesh regeneration ran in a highly parallel fashion on the GPU using compute shaders, and the results were then rendered using vertex and fragment shaders

- Frustum culling with bounding spheres was used to increase the efficiency of the overall process
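To illustrate the culling and LOD selection steps, here is a minimal CPU-side Python sketch. It is not the project's compute shader code. The `Plane` type, the function names, and the discrete distance thresholds are all assumptions made for illustration, and the loop runs serially where the GPU version would handle instances in parallel.

```python
import math
from dataclasses import dataclass


@dataclass
class Plane:
    # Plane n . p + d = 0 with the unit normal n pointing into the frustum.
    nx: float
    ny: float
    nz: float
    d: float


def sphere_in_frustum(center, radius, planes):
    """Return False only if the sphere lies fully outside at least one plane."""
    cx, cy, cz = center
    for p in planes:
        signed_distance = p.nx * cx + p.ny * cy + p.nz * cz + p.d
        if signed_distance < -radius:
            return False
    return True


def select_lod(distance, thresholds):
    """Index 0 is the most detailed level; len(thresholds) is the coarsest."""
    for level, limit in enumerate(thresholds):
        if distance <= limit:
            return level
    return len(thresholds)


def visible_lods(camera, instances, planes, thresholds):
    """Cull instances by bounding sphere, then pick an LOD for each survivor."""
    result = {}
    for name, (center, radius) in instances.items():
        if not sphere_in_frustum(center, radius, planes):
            continue
        result[name] = select_lod(math.dist(camera, center), thresholds)
    return result


# Hypothetical scene: only near and far planes, camera at the origin looking down +z.
planes = [Plane(0.0, 0.0, 1.0, -1.0), Plane(0.0, 0.0, -1.0, 100.0)]
instances = {
    "near": ((0.0, 0.0, 5.0), 1.0),
    "far": ((0.0, 0.0, 80.0), 1.0),
    "behind": ((0.0, 0.0, -10.0), 1.0),
}
lods = visible_lods((0.0, 0.0, 0.0), instances, planes, [10.0, 50.0])
```

Because each instance is tested independently of the others, this kind of loop maps naturally to one GPU thread per instance.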

The final implementation reached 30 FPS with ten thousand instances and a total of 56,286,540 triangles. For comparison, the same scene rendered with the Unity game engine alone and no LOD runs at 4 FPS. These figures were measured on a machine with the following specs:

Machine model: Dell XPS 15

Processor: i7 6th gen

GPU: Nvidia GeForce GTX 960M, 2 GB

Dimension 223

2D to 3D Conversion.

Bachelor’s Senior Research Project.

Supervisor: Dr. Jyoti Tandukar

This project was done by a team of four during my Bachelor’s senior year. We originally intended to work on partial 3D reconstruction from an image using machine learning techniques, but because of limited resources and time, our supervisor advised us to narrow the project scope.

We ended up researching different ways to obtain depth information from 2D images and inverting the simple pinhole-camera projection to (partially) reconstruct the 3D scene. The following techniques were tested for obtaining the depth maps:

- Stereo images

  - OpenCV was used to estimate the depth of different points in the 2D image based on how far each point shifts between the left and right images.

- Kinect

  - Probably the best approach: the IR sensor in the Kinect can directly generate a depth map of the scene it captures.

- Lens Blur

  - This was our main approach, as it was readily available to anyone with an Android phone. The Lens Blur camera by Google can be used to achieve a shallow depth-of-field effect. We decoded the depth information embedded in the pictures taken with this application and reused it for our purposes.
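The pinhole mathematics behind the reconstruction can be sketched in a few lines of Python. This is an illustrative sketch, not the project's code: the function names and the intrinsics `fx`, `fy`, `cx`, `cy` are assumed, and the stereo formula assumes a rectified image pair.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair; None if disparity is not positive."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def back_project(u, v, depth, fx, fy, cx, cy):
    """Invert the pinhole projection u = fx * X / Z + cx, v = fy * Y / Z + cy."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def depth_map_to_points(depth_map, fx, fy, cx, cy):
    """Turn a depth map (list of rows) into 3D points, skipping missing depths."""
    points = []
    for v, row in enumerate(depth_map):
        for u, depth in enumerate(row):
            if depth is None or depth <= 0:
                continue
            points.append(back_project(u, v, depth, fx, fy, cx, cy))
    return points


# Hypothetical 2x2 depth map with two missing pixels.
cloud = depth_map_to_points([[1.0, None], [0.0, 2.0]], 1.0, 1.0, 0.0, 0.0)
```

Whichever source the depth map comes from (stereo, Kinect, or Lens Blur), the back-projection step stays the same. Only the way depth is obtained differs.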

The final reconstructed scene was also available to view in Virtual Reality.

Flipped

2D Platform Adventure Game for Android devices.

Finalist in Games and Entertainment section of Ncell App Camp 2015.

Flipped is a game where a boy travels through mirrors to different dimensions to save his lost dog. Each dimension is flipped relative to the previous one, in the sense that everything from gravity to user controls is inverted. The overall goal of the game is to keep moving forward, solving puzzles and avoiding obstacles. The flipped dimensions make the game challenging because they work against the player’s instincts for how to move the character.

The game was written in Java. Box2D was used for simulating physics in the game. The artwork was created by my team, and the graphics were programmed using OpenGL ES. We also developed a game engine based on the ECS (Entity-Component-System) concept. It proved flexible and made it easy to design and develop new puzzles, levels and features.
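To show the ECS idea and how a flipped dimension could fit into it, here is a minimal Python sketch. The original engine was written in Java with Box2D. Every class and function name here is hypothetical, and gravity is integrated by hand only to keep the example self-contained.

```python
from dataclasses import dataclass


@dataclass
class Position:
    x: float
    y: float


@dataclass
class Velocity:
    dx: float
    dy: float


class World:
    """Entities are integer ids; components live in per-type dictionaries."""

    def __init__(self, gravity=-9.8):
        self.gravity = gravity
        self.next_id = 0
        self.components = {}  # component type -> {entity id -> component}

    def create(self, *components):
        entity = self.next_id
        self.next_id += 1
        for component in components:
            self.components.setdefault(type(component), {})[entity] = component
        return entity

    def query(self, *types):
        """Yield (entity, components...) for entities that have every requested type."""
        stores = [self.components.get(t, {}) for t in types]
        for entity in stores[0]:
            if all(entity in store for store in stores):
                yield (entity, *(store[entity] for store in stores))

    def flip(self):
        """Entering a mirrored dimension inverts gravity."""
        self.gravity = -self.gravity


def gravity_system(world, dt):
    for _, velocity in world.query(Velocity):
        velocity.dy += world.gravity * dt


def movement_system(world, dt):
    for _, position, velocity in world.query(Position, Velocity):
        position.x += velocity.dx * dt
        position.y += velocity.dy * dt
```

Since systems only look at components, a new puzzle element can be built by combining existing components rather than by adding a new class hierarchy.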

BatSS

Blind Signal Separation.

Bachelor’s Junior Research Project.

Developed by a team of four, this was a small endeavor to create an application that separates the voices of two people in a mixed recording. To achieve this, we built a backpropagation neural network and trained it on our own voices. The input audio had to be a stereo recording and was converted to the frequency domain before being fed into the neural network. The neural network was implemented from scratch using OpenCL for GPU parallelization. The final solution worked well with our own voices but not as well with other people’s voices. We did further research on using recurrent neural networks and obtaining more training data to improve performance. The project is currently on hold, but we plan to build a better sound-separation application in the future based on our research.
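The frequency-domain preprocessing can be illustrated with a small Python sketch. It assumes a plain discrete Fourier transform over non-overlapping frames; the actual transform, frame size, and feature layout used in the project are not stated here, so the function names and choices below are hypothetical.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of the first N/2 + 1 DFT bins of a real-valued frame (naive O(N^2) DFT)."""
    n = len(frame)
    magnitudes = []
    for k in range(n // 2 + 1):
        total = sum(sample * cmath.exp(-2j * math.pi * k * t / n)
                    for t, sample in enumerate(frame))
        magnitudes.append(abs(total))
    return magnitudes


def stereo_features(left, right, frame_size):
    """Split both channels into non-overlapping frames and join their spectra per frame."""
    features = []
    usable = min(len(left), len(right))
    for start in range(0, usable - frame_size + 1, frame_size):
        left_spectrum = dft_magnitudes(left[start:start + frame_size])
        right_spectrum = dft_magnitudes(right[start:start + frame_size])
        features.append(left_spectrum + right_spectrum)
    return features


# Hypothetical input: one cycle of a cosine per 8-sample frame on both channels.
tone = [math.cos(2 * math.pi * t / 8) for t in range(16)]
features = stereo_features(tone, tone, 8)
```

Each feature vector would then serve as one input sample for the network. A real implementation would use an FFT rather than this quadratic-time loop.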

Mrita Sikar

3D Third-Person Shooter Game.

Best academic project in Object-Oriented Programming.

This was developed by a team of two in my sophomore year. It is a zombie-hunting game that the two of us developed completely from scratch. The game engine we developed uses modern OpenGL and supports features such as shadow mapping, skinned animation, collision detection (using octrees for the broad phase and the separating axis test for the narrow phase), and potential energy functions for simulating chasing and avoiding behavior in the zombies. The game looked good, and it is one of the few projects I am really proud of, considering how little time we had to work on it and that we used few libraries and implemented most of the engine ourselves. However, we did not get to spend much time on level design and had very few 3D models to use, so the game is still incomplete as far as playability is concerned.
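The chasing and avoiding behavior can be sketched with a classic potential-field formulation. The Python below is a 2D illustration under assumed potentials (quadratic attraction toward the player and a short-range repulsion from obstacles). The game's actual functions and constants are not given here, so every name and default value is hypothetical.

```python
import math


def steering_force(zombie, target, obstacles, attract=1.0, repel=4.0, influence=3.0):
    """Negative gradient of U = U_attract(target) + sum of U_repel(obstacle) in 2D."""
    # Attractive potential 0.5 * attract * |p - target|^2 pulls toward the target.
    fx = attract * (target[0] - zombie[0])
    fy = attract * (target[1] - zombie[1])
    for ox, oy in obstacles:
        dx, dy = zombie[0] - ox, zombie[1] - oy
        dist = math.hypot(dx, dy)
        if dist == 0.0 or dist >= influence:
            continue  # outside the influence radius, or exactly on the obstacle
        # Repulsive potential 0.5 * repel * (1/dist - 1/influence)^2 pushes away.
        magnitude = repel * (1.0 / dist - 1.0 / influence) / (dist * dist)
        fx += magnitude * dx / dist
        fy += magnitude * dy / dist
    return fx, fy


# Hypothetical scene: the player is to the right, one obstacle sits just above the zombie.
force = steering_force((0.0, 0.0), (10.0, 0.0), [(0.0, 1.0)])
```

Moving each zombie a small step along the returned force every frame makes it head for the player while bending around nearby obstacles.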

Field Campaign Explorer

Parallel cloud architecture for rendering very large 3D point clouds.

FCX Poster

Worked on a team with:

Global Hydrology Resource Center, The University of Alabama in Huntsville

The Field Campaign Explorer allows data exploration, discovery and visualization of scientific datasets collected through flights, satellites, and ground-based instruments. It visualizes very large datasets in 3D, including some time-based animation of data points.

The main obstacle in developing this tool was the large size of the datasets. The overall process required the system to query a subset of the datasets from the database, generate 3D geometries from the queried data, stream the 3D outputs to the web frontend, and then visualize these outputs in the browser. Each of these steps presented a challenge.

To solve these problems, we first stored the original data in the cloud using Zarr, a storage format that allows data to be downloaded in chunks. With Dask and its lazy loading functions, a small chunk of data could be efficiently queried and converted to a 3D point cloud format that the Cesium 3D library supports. A cluster of ECS Fargate containers was set up in the cloud to query and render multiple chunks of point clouds in parallel. Each of these chunks is rendered at multiple levels of detail. The final point cloud is then visualized in chunks, each at a different level of detail, to improve performance.

As part of a bigger team, I worked on building the cluster-based server architecture, which was responsible for querying the datasets in parallel and generating point clouds in chunks that could be served to the frontend for analysis and visualization.
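The chunk-and-LOD idea can be illustrated without any of the cloud tooling. The Python sketch below uses plain lists rather than Zarr, Dask, or the Cesium point cloud format. The grid chunking, the stride-based decimation, and all function names are assumptions made for illustration only.

```python
from collections import defaultdict


def chunk_points(points, chunk_size):
    """Group (x, y, z) points into cubic chunks keyed by integer grid coordinates."""
    chunks = defaultdict(list)
    for x, y, z in points:
        key = (int(x // chunk_size), int(y // chunk_size), int(z // chunk_size))
        chunks[key].append((x, y, z))
    return dict(chunks)


def build_lods(chunk, levels):
    """Level 0 keeps every point; each further level keeps every 2nd point of the previous one."""
    lods = [list(chunk)]
    for _ in range(1, levels):
        lods.append(lods[-1][::2])
    return lods


def build_tiles(points, chunk_size, levels):
    """Chunks are independent, so this loop is the part separate workers could share."""
    return {key: build_lods(chunk, levels)
            for key, chunk in chunk_points(points, chunk_size).items()}


# Hypothetical data: eight points along the x axis, split into chunks 4 units wide.
tiles = build_tiles([(float(i), 0.0, 0.0) for i in range(8)], 4.0, 3)
```

A viewer can then request a coarse level for distant chunks and a fine level for nearby ones, which keeps the number of points drawn at any moment manageable.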

VISAGE

A Visualization and Exploration Framework for Environmental Data.

Worked on a team with:

Global Hydrology Resource Center, The University of Alabama in Huntsville

As part of a bigger team, I worked on developing a serverless backend with AWS to ingest and query large datasets for 3D visualization systems.

DEEP

A semi-automated data analysis and visualization tool developed for the humanitarian community, funded by various UN agencies, the IFRC, and other INGOs.

DEEP Introduction

Worked on a team with:

Togglecorp

DEEP is used all over the world by various agencies to collect, analyse and visualize data using planned frameworks.

I was involved in both backend and frontend development of DEEP, with hands-on experience designing the system’s backend architecture and developing front-end components. I worked on this project with colleagues at Togglecorp for more than two years, which did a lot to build my full-stack engineering skills.
